Way too long

Ain't nobody got the time for that!

Data-mining

So, I had an idea for a project... And now, 2 weeks later, it's still dragging on... What is it? Well, it's simple. Analyse and parse StackOverflow data.

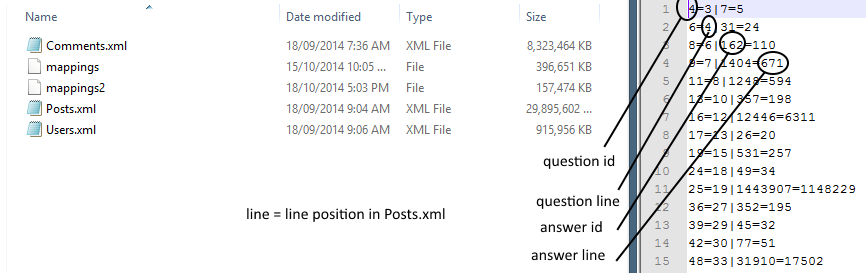

I've done some tedious and CPU-heating tasks in order to convert the StackOverflow posts data dump into meta files with only the bare mappings: post id, line of post, answer id, line of answer.

It looks simple enough, but even with the actual post content stripped, and most other meta-data, this "minimalistic" meta descriptor still has a weight of 100MB.

In essence, it took 48 hours of "WTF" to extract data from a 28.5GB XML file to a simple line-by-line descriptor that's 100MB.
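For the curious, here's a minimal sketch of what building that kind of descriptor could look like. It is not the tooling I actually used. It assumes the dump's `Posts.xml` keeps one `<row ... />` element per line and uses the `Id`, `PostTypeId` and `AcceptedAnswerId` attributes; the function name `build_descriptor` is made up for illustration.

```python
import xml.etree.ElementTree as ET


def build_descriptor(lines):
    """Yield (post_id, post_line, answer_id, answer_line) tuples.

    Assumes one <row .../> element per line (an assumption about the
    dump layout), so the file is read line by line and never loaded whole.
    """
    question_line = {}  # question id -> line number
    accepted = {}       # question id -> accepted answer id
    answer_line = {}    # answer id -> line number

    for lineno, line in enumerate(lines, start=1):
        line = line.strip()
        if not line.startswith("<row"):
            continue  # skip the XML header and the wrapper element
        row = ET.fromstring(line)
        post_id = int(row.get("Id"))
        if row.get("PostTypeId") == "1":    # question
            question_line[post_id] = lineno
            if row.get("AcceptedAnswerId"):
                accepted[post_id] = int(row.get("AcceptedAnswerId"))
        elif row.get("PostTypeId") == "2":  # answer
            answer_line[post_id] = lineno

    for qid, aid in accepted.items():
        if aid in answer_line:
            yield qid, question_line[qid], aid, answer_line[aid]
```

You'd feed it an open file handle, e.g. `build_descriptor(open("Posts.xml", encoding="utf-8"))`. Note that the three dictionaries still have to fit in memory, which may or may not be fine for a dump this size.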

Since I plan on keeping this blog constantly updated, this will be the first of a few rants involving time in technology in general (data-mining in this case) - sure, computers are really fast and a few nanoseconds matter - but for larger projects, it sure takes a heck of a lot of time to accomplish something.

I'm using an external solution and it looks like I'm definitely stuck for the long haul. Of course, I can get other stuff done in this timeframe (and I will), but it goes to show: technology isn't as fast or fancy as we think it is at the consumer scale.

It really puts the power of Google's entire systems into perspective.

Rendering

Wow does rendering things take a lot of time. Even with YouTube videos, it's obvious that if you're trying to encode 30-minute gameplay footage or whatever:

it will still take quite some time...

Which is quite ridiculous considering the power of GPUs right now. I can watch WebGL demos at max settings in real-time, play Goat Simulator w/ Max Settings+1080p @60FPS, yet... Rendering footage with some program that doesn't use a GPU TAKES AGES. And even worse, unlike with data-mining where the bottleneck is the hard-drive bandwidth, rendering takes up 100% of your CPU, making any other tasks near-impossible to complete.

CPU-based renderers are the bane of existence for humanity.

Disney renders "its new animated film on a 55,000-core supercomputer", where "To manage that cluster and the 400,000-plus computations it processes per day (roughly about 1.1 million computational hours)".
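As a rough sanity check on those quoted figures (my own arithmetic, and it assumes "computational hours" means core-hours per day, which the quote doesn't spell out):

```python
CORES = 55_000            # from the quote above
HOURS_PER_DAY = 24
QUOTED_HOURS = 1_100_000  # "roughly about 1.1 million computational hours"

# Ceiling on core-hours per day if every core ran flat out
max_core_hours = CORES * HOURS_PER_DAY

# Share of that ceiling the quoted figure would represent
utilisation = QUOTED_HOURS / max_core_hours

print(max_core_hours)        # 1320000
print(f"{utilisation:.0%}")  # 83%
```

So under that assumption, the quoted number is at least in the right ballpark for a cluster of that size.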

Which is absolutely mind-boggling. Of course, with quantum computing, that same render will be solved in... ln(10^6)/ln(2) ≈ 20 hours (I think). But using existing technology, instead of using a CPU-based renderer, maybe try GPUs, or even ASICs? That might speed things up (a little).
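The arithmetic behind that guess, for what it's worth. The logarithmic speedup itself is purely my assumption here; quantum computers aren't known to give that kind of speedup for arbitrary workloads like rendering.

```python
import math

# Assumed (not established) logarithmic speedup on ~10^6 compute hours
hours = math.log(10**6) / math.log(2)  # same as log2(10^6)

print(round(hours, 2))  # 19.93
```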

My experience with rendering comes from a pretty little CPU-based renderer called "TrueSpace". The following scene took 2.6 days to render - and all just for 13 seconds' worth of footage.

Installing Operating Systems

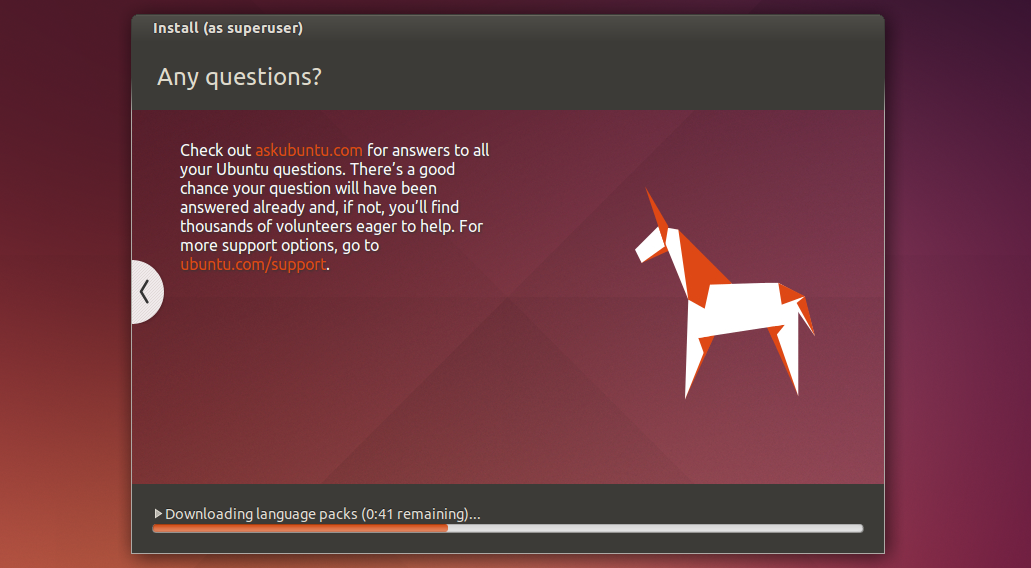

I install operating systems on a regular basis. Regular meaning once every week. This week's addition was Ubuntu; it's 15 minutes in and I'm already impatient.

Why? Well, not only did I have to download the Ubuntu 14.10 ISO, but now Ubuntu's decided to download language packs and updates.

I've installed Mac OS X 10.10 and Windows 8.1 in the past few months. Yes, they're the most recent and shiniest operating systems, which will be long obsolete by the time you read this, but neither of them annoyingly decided to install additional "language packs" or, to be frank, download anything at all. OS X installed like a breeze on the Solid State Drive, and Windows did too. But Ubuntu has to "download" some stuff before the installation is completed?

Mind you, Ubuntu touts itself as an energy-efficient and lightweight operating system, but through my lens, I see it as an operating system increasingly filled with bloat and invasive adware (such as the Amazon advertisement sitting right in the dock).

The downloads that delayed the installation time didn't even install the actual updates that are critical to Ubuntu, and hence a secondary step would have to be taken in order to update the operating system...

So I dislike installing Ubuntu, and really don't get why anyone would enjoy using it...

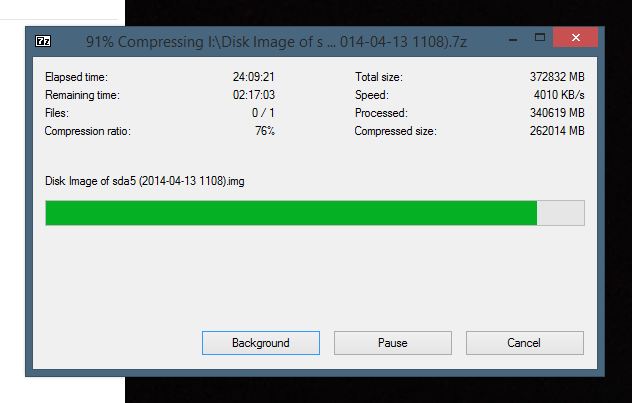

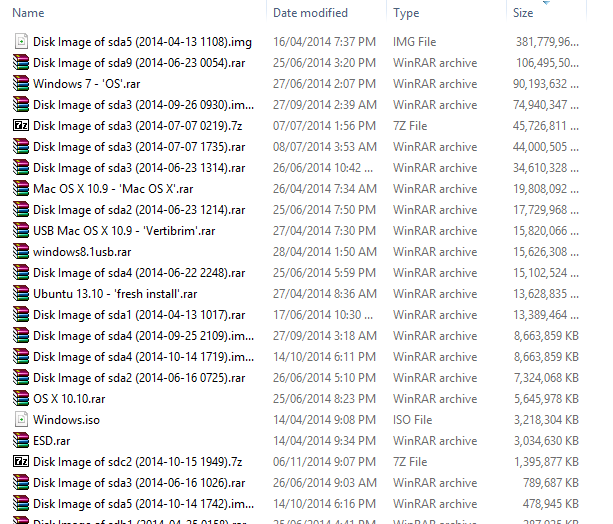

Backing up, Archiving and Testing ENTIRE HARD DRIVES.

This one is easy. I had a project where I was converting my notebook/laptop/minidesktop computer from the forsaken MBR format to GPT, because GPT is cool and apparently a 2011 laptop can support the partitioning type.

Suffice it to say, to do so, you've got to back up the contents of your hard drive partitions first in order to keep the files.

And you end up with gigabytes upon gigabytes of data you're never going to see again (since they're backups).

The logical thing to do with these files is to archive them in order to achieve a good compression ratio. I'm going to regret it later, since I chose 7-Zip over WinRAR for the compression ratio (and WinRAR has a recovery record), but heck does it take a long time to image a partition and then archive it...

In the short term, doing something like this is great... But there's always that rare chance of a byte going wrong and ruining your day! (and possibly even year).

I'll illustrate this fear and make a bonus analogy to Minesweeper below (hint: each and every file has the possibility of fragmenting and corrupting the drive).

Using 7zip, zip, tar, tar+gz, or any other format without a recovery record system increases your chances of receiving corrupted data in the long term.

Over time, your external hard drive will degrade to the point where a few bytes get corrupted. The corrupted bytes are the "bomb" that ends the game. CRC32, or any other checksum (MD5 and SHA256 are my favourites at present), can help you find this "bomb", so you know you're some variable distance away from a corrupted byte. But you don't actually want to touch this corrupted byte, because it might end the file. In archives where the checksum determines nearly everything, most importantly whether or not a file has been extracted successfully, that "bomb" can ruin your archive. You don't want your archive sitting on the corrupted segment of the hard drive, or else it's practically all over.
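If you want to at least know when a "bomb" has gone off, a checksum recorded at backup time does the trick. Here's a minimal sketch using Python's standard `hashlib`; the helper names `sha256_of` and `is_intact` are made up for illustration. Keep in mind that this only detects corruption. It can't repair anything, which is where a recovery record would come in.

```python
import hashlib


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so a 100GB archive never has to fit in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_intact(path, expected_hex):
    """Compare the file's current hash with the one saved at backup time."""
    return sha256_of(path) == expected_hex
```

The idea is to store the output of `sha256_of` next to the archive right after creating it, then call `is_intact` whenever you want to check on it years later.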

In short, it ain't fun archiving 100GB+ worth of data.

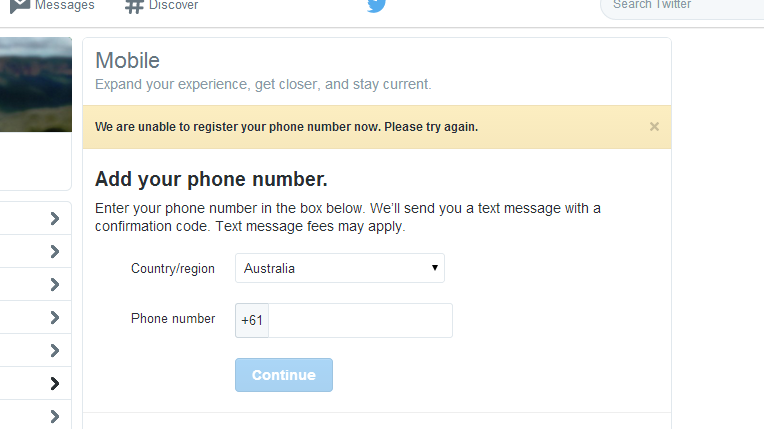

Getting Twitter's phone activation thing to work...

This is taking too long and I hate it. What was on Twitter's mind when they decided that phone activation is necessary to create a Twitter app?

Don't they want more developers, not fewer?

Oh wait. Twitter is a company that loses money. Forgot...

Don't tell me that "We are unable to register your phone number now. Please try again."

6 tries and 6 SMS messages received with the same activation code still doesn't make things work? You done goofed, Twitter.

Rendering

So "rendering" is fun. But rendering with a high-end system during summer really sucks, you know. It's like taking a bath in dirt. Sure it's practical for birds - and mud cools you down - but for humans?

All I want to do is to see what I have created, and it's really not possible to do so without rendering the project (since RAM Preview only shows about 100 frames).

This is a 4K web render - it's no joke... I've done plenty of these in the past few years, but this time around, there are fancy blurs, filters, effects and layers added into the mix.

I don't even think that the "28 hours remaining" estimate is telling the truth either - I have a script which makes each frame more complex to render the closer you get to the end. The estimate is going to change, and 40 hours+ seems reasonable.
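To show why a remaining-time figure can undershoot when later frames cost more, here's a minimal sketch. It assumes (my guess, not something I've confirmed about any particular renderer) that the estimate is simply the average time per finished frame multiplied by the frames left, and that per-frame cost grows linearly. The function names and numbers are made up for illustration.

```python
def naive_eta(elapsed, frames_done, total_frames):
    """Remaining time if every remaining frame costs the average so far."""
    return elapsed / frames_done * (total_frames - frames_done)


def linear_growth_eta(base, growth, frames_done, total_frames):
    """Remaining time if frame i (0-based) costs base + growth * i seconds."""
    return sum(base + growth * i for i in range(frames_done, total_frames))


# Hypothetical render: 100 frames, frame i takes 10 + i seconds, 50 done.
elapsed = sum(10 + i for i in range(50))

print(naive_eta(elapsed, 50, 100))         # 1725.0
print(linear_growth_eta(10, 1, 50, 100))   # 4225
```

With these made-up numbers, the naive estimate comes out at well under half of the real remaining time, which is the same kind of gap as "28 hours" versus "40 hours+".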

And don't forget that I haven't even seen a final output yet - Not even a draft - So it's likely that I've got to change it, and re-render the whole thing.

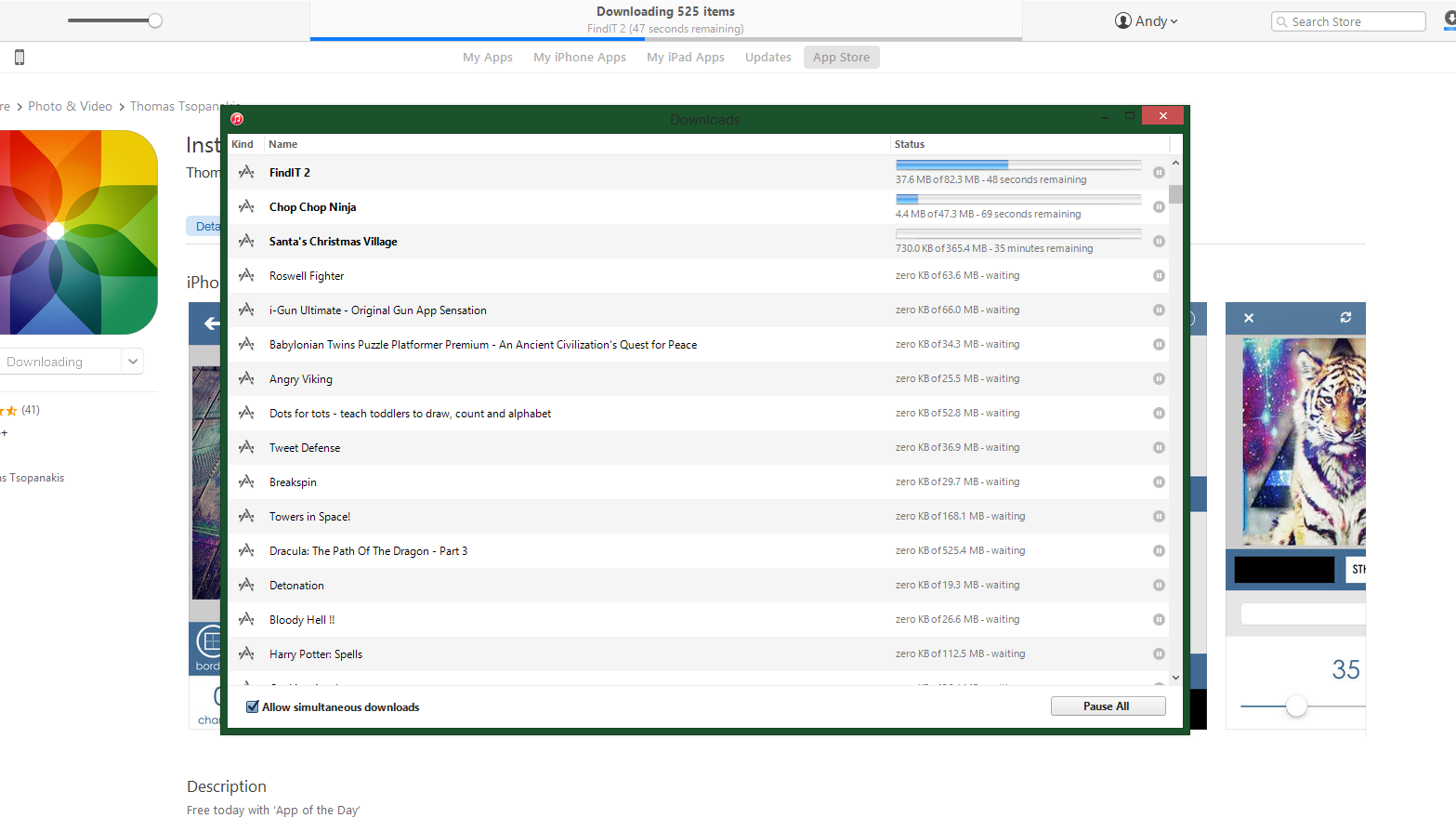

Downloading iTunes Apps

iTunes has this annoying tendency to force you to download apps that you once decided to purchase, but never actually bothered to download - like Steam, where you end up with a backlog of games that you'll never play.

I wanted to download one app. Yes, just the single one, and iTunes decided to dredge up my liberal app-downloading past and download 500 or so apps.

I don't even want these apps. They're going to be on my computer for no good reason at all, and yet iTunes insists that I should download them.

So that's what I'm doing right now, and it's going to be taking way too long...

Building Android

All I wanted to do was to remotely connect to my computer. And I have to build Android to do so?

Building Android has taken 3 hours already, and still hasn't completed. I guess it's my fault that I tried it on a 4-year-old i7 laptop (i7 720QM).

WebKit/Blink has so far been the only thing that appears to be compiling, and I think that I attempted to compile Google Chrome previously (for transparency in Node-Webkit), so I'm blaming the long compile time on Google Chrome.